Frictionless Spinning in the Void: AI is Not 'There'

More than ever, we need to bring into focus and nurture those aspects of being human that escape notice when we focus on our calculating, rationalizing, and cognizing abilities.

Lately, I've been on a mini "philosophy tour," traveling around giving a talk about AI called "Frictionless Spinning in the Void: AI is not 'There'."

Last week I presented the talk at the Bombay Beach Institute (BBI) in the uncanny little town of Bombay Beach, CA. The BBI was cofounded by Tao Ruspoli, who directed Being in the World, the movie based on Hubert Dreyfus's interpretation of Heidegger. It remains one of the best philosophical documentaries I have ever seen, and I highly recommend it. While I was in Bombay Beach, Tao also interviewed me about philosophy and AI for his podcast of the same name (Being in the World). When that is released, I will share the link here.

This title may suggest this is an "anti-AI" talk, but it's not. Current AI technologies like large language model (LLM) chatbots are wondrous creations. Talking libraries! Linguistic automata! What a weird and fascinating time to be alive.

It is still far from clear what new possibilities will open and close given the emergence of LLMs. We should all take part in experimenting and developing new ways to engage with the world through these tools.

But how are these technologies shaping our understanding of ourselves?

I am concerned that today's AI conversation often takes for granted an exceedingly narrow conception of being human. Researchers and devotees of today's AI often simply assume that the essential features of human intelligence can be abstracted away both from our embodied immersion in the world and the grip of what we care about.

What kind of human beings will we become if we live in light of these assumptions?

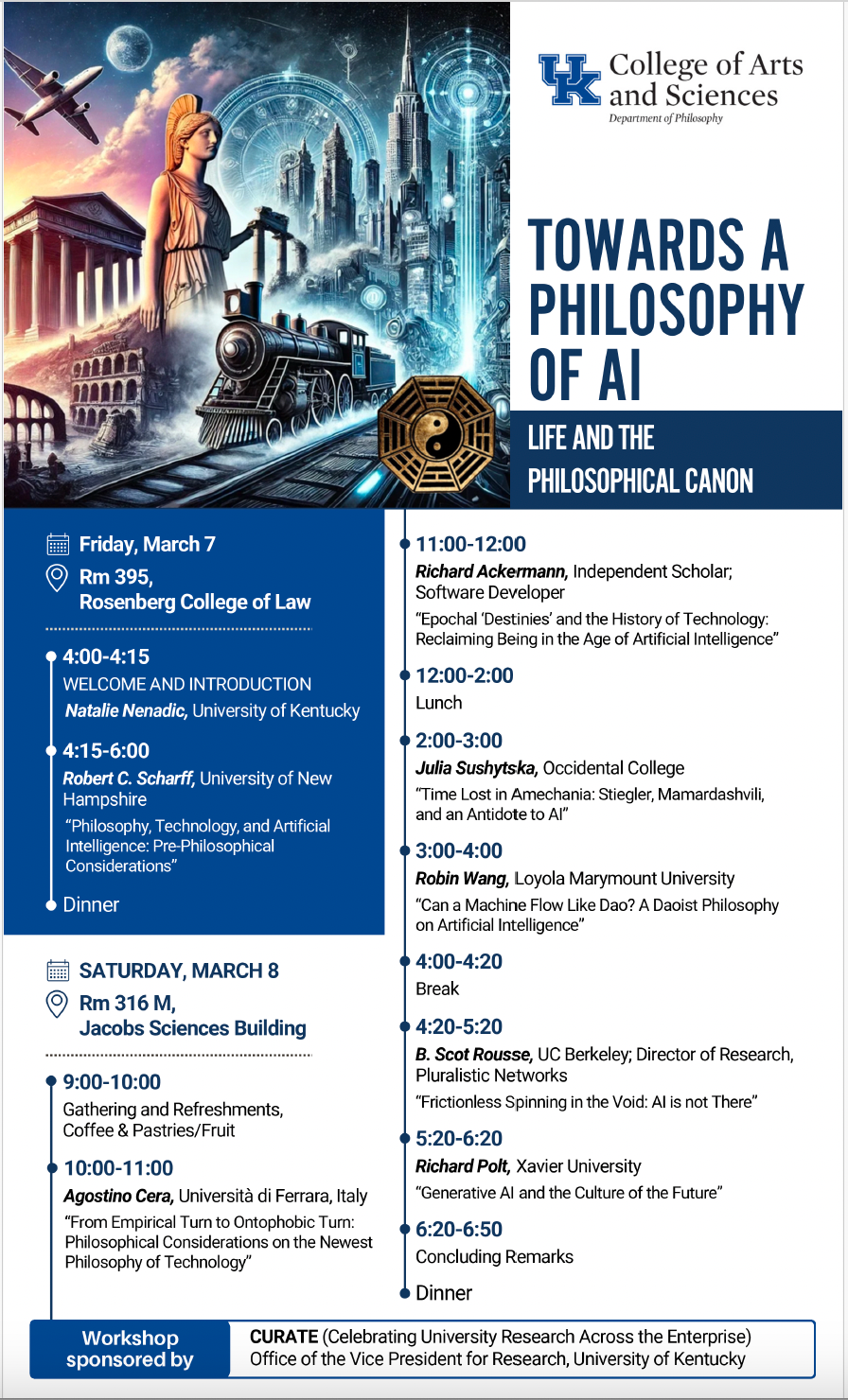

This weekend, I am in Lexington to present the talk at a philosophy of AI conference at the University of Kentucky:

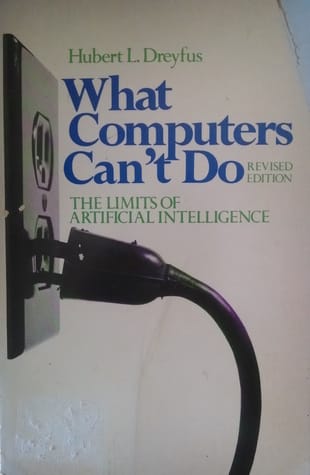

Human intelligence, whatever that is, is naturally held up as the benchmark for evaluating artificial intelligence, whatever that turns out to be. But as Hubert Dreyfus started pointing out as far back as his 1965 report for the RAND Corporation, "Alchemy and Artificial Intelligence," researchers in AI often operate with an artificially rationalized and disembodied conception of human being-in-the-world. This remains the case even if the symbolic, rules-based AI that was the target of Dreyfus's critique has been replaced by the neural network approach.

In the contemporary AI conversation, intelligence is assumed to be nothing but a "frictionless spinning in the void." LLM chatbots simulate human intelligence but without having any actual embodied presence in the world. Tech CEOs and their hype-hungry reporters in the media assure us that this fact simply doesn't matter.

Hence, the recent ubiquity of a deeply suspect distinction between "cognitive" and "non-cognitive" tasks. For an example of how obvious and non-problematic this distinction is currently assumed to be, notice an NYTimes opinion piece posted this week.

In "The Government Knows AGI is Coming," Ezra Klein predicts the emergence of artificial general intelligence (AGI) within two or three years. How do Klein and his guest define AGI?

- Transformational artificial intelligence capable of doing basically anything a human being could do behind a computer — but better. (Ezra Klein)

- A system capable of doing almost any cognitive task a human can do. (Ben Buchanan, Klein's guest on March 4)

What are cognitive tasks? Presumably those activities requiring neither embodied presence nor care. In this disembodied, detached realm computers will soon be able to take over from humans (or so the story goes).

Non-cognitive tasks would be those that require both embodied presence and care. Think of the care-giving professions like childcare, nursing, and eldercare. Such are the tasks that we temporarily took to calling "essential work" during the pandemic. These are currently not advertised as being susceptible to any imminent AI take-over. But why call these skills non-cognitive? This assumes our cognition swings free from our care.

Again, my worry is that researchers, CEOs, and talking heads assume human intelligence can be separated from embodiment and care, as if this were obvious—and that most people accept it without question: “Of course 'human-level intelligence' is completely abstractable from embodied presence and care!”

We don't even bother to think this thought. We just accept it as true. What kind of human beings do we make ourselves into when we accept this? What kind of technologies will we make? How will we relate to the world?

Hubert Dreyfus's prescient warning at the end of his 1972 book What Computers Can’t Do (the book-length version of his 1965 RAND Corporation report) remains as pressing as ever:

“People have begun to think of themselves as objects able to fit into the inflexible calculations of disembodied machines: machines for which the human form-of-life must be analyzed into meaningless facts, rather than a field of concern organized by sensory-motor skills. Our risk is not the advent of superintelligent computers, but of subintelligent human beings.”

This danger continues to haunt us. More than ever, we need to bring into focus and nurture those aspects of being human that escape notice when we focus only on our calculating, reckoning, rationalizing, and cognizing abilities.

Our intelligence is not a free-floating capacity to work remotely and solve narrowly defined cognitive problems. Our way of being intelligent and our way of "having" or living in language are intimately and holistically connected to our embodied caring and being in the world.

We are "there" in our everyday familiar world not just through objective coordinates that can be algorithmically formalized, but through our active tending to what matters, primordially indexed to our particular mortal body.

It is on this score that Heidegger and the tradition of Heidegger-influenced AI-critique remain deeply relevant. My talk continues my reactualization of this tradition in today's context. I will share videos of the talk when they appear on YouTube.

What questions, thoughts, observations, or perplexities does all of this bring up for you? Let me know in the comments or by sending me a message!
